Machines vs. Humans: Is technology the answer to everything?

We review the opinions of journalists and authors on this question.


Techno-chauvinism is the belief that technology is always the solution. In journalism, it shows up in the idea that technological development and artificial intelligence are transforming how journalists discover, analyze, and disseminate news.

During the JournalismAI Festival, held virtually in December 2020, researchers and reporters from around the world presented innovative and eye-opening work done with artificial intelligence (AI), ranging from creating new story formats to better identifying a news organization's editorial biases.

One example is a pilot program called the AIJO project, in which several media outlets, including Reuters, AFP, Nikkei, and La Nación, collaborated on using AI to detect binary gender bias in their reporting. The project is part of a broader trend: over the past few years, several news organizations have been exploring machine learning as a way to examine gender representation in the media.

The AIJO project's software scanned thousands of articles, detecting and categorizing both story images and interview quotes to measure how evenly coverage represented men and women. The results were grim: women appeared in only 27.2% of the images used in the articles, made up 21% of the people quoted, and accounted for only 22% of the sources cited, according to Adrian Ma, professor at the School of Journalism at Ryerson University and a participant in the project. The team also found that quotes from female sources were shorter than those from male sources. These findings echo separate research from the Pew Research Center, which found that women are underrepresented in Google image searches.

This type of AI development has exciting potential, particularly as the algorithm expands to include other variables. Giving newsrooms the ability to measure the diversity of their content quickly could help them achieve more balanced and representative coverage, something the industry has long failed to do. For more on this work, see the video recording of AIJO project members Agnes Stenbom and Issei Mori presenting the group's findings.

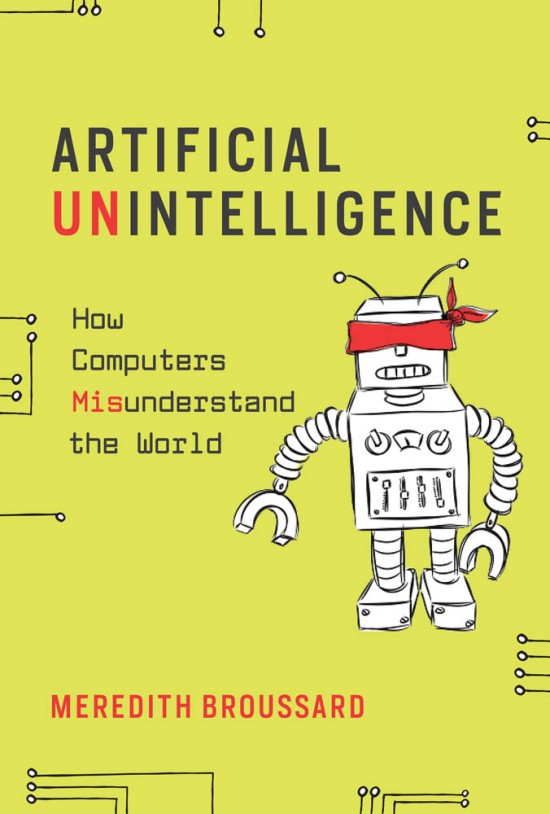

Still, not every sign suggests that technology will help close existing gaps. In her book "Artificial Unintelligence: How Computers Misunderstand the World," Meredith Broussard argues that it is simply untrue that social problems will disappear in some technological utopia. Using artificial intelligence, the book examines why students can't pass standardized tests, and it shows why trying to fix the US campaign finance system by building artificial intelligence software will not work.

People often perceive AI as inherently superior to the human mind, utterly free of earthly flaws and fallacies. According to Broussard, however, all technology is fundamentally shaped by the beliefs and biases of the people who design it.

The ultimate goal of AI is to create a general intelligence that can adapt to a wide range of situations. Such a system remains far beyond even the most advanced software being developed today. Current AI applications, including those built on machine learning, are all forms of narrow AI, each focused on mastering a particular task.

Broussard presented her critical look at contemporary media's infatuation with AI at an event for the Series of Feminist Speakers and Accessible Publishing and Communications Technologies. First, she urged the audience to understand what AI actually is: a machine designed primarily by male scientists that knows only as much as it is taught. She further argued that governments should establish a federal consumer protection agency to audit and regulate the algorithms that govern everything from social media to healthcare decisions.

People have long believed that computers are more objective and less biased than people. Authors such as Broussard show that this is not always the case. The key is to think about what the right tool for the task at hand is. Sometimes it's a computer; sometimes it's not. Either way, human judgment and the context in which a decision is made should always be taken into account. For example, when determining whether a person has a disease, context is essential, and machines do not always consider it. We can use computers to make certain kinds of decisions but not others, and that is fine.